CloudPilot AI Response to Datadog’s State of Containers Report

The newly released State of Containers and Serverless report by Datadog confirms a harsh reality: while cloud adoption is maturing, efficiency is lagging behind.

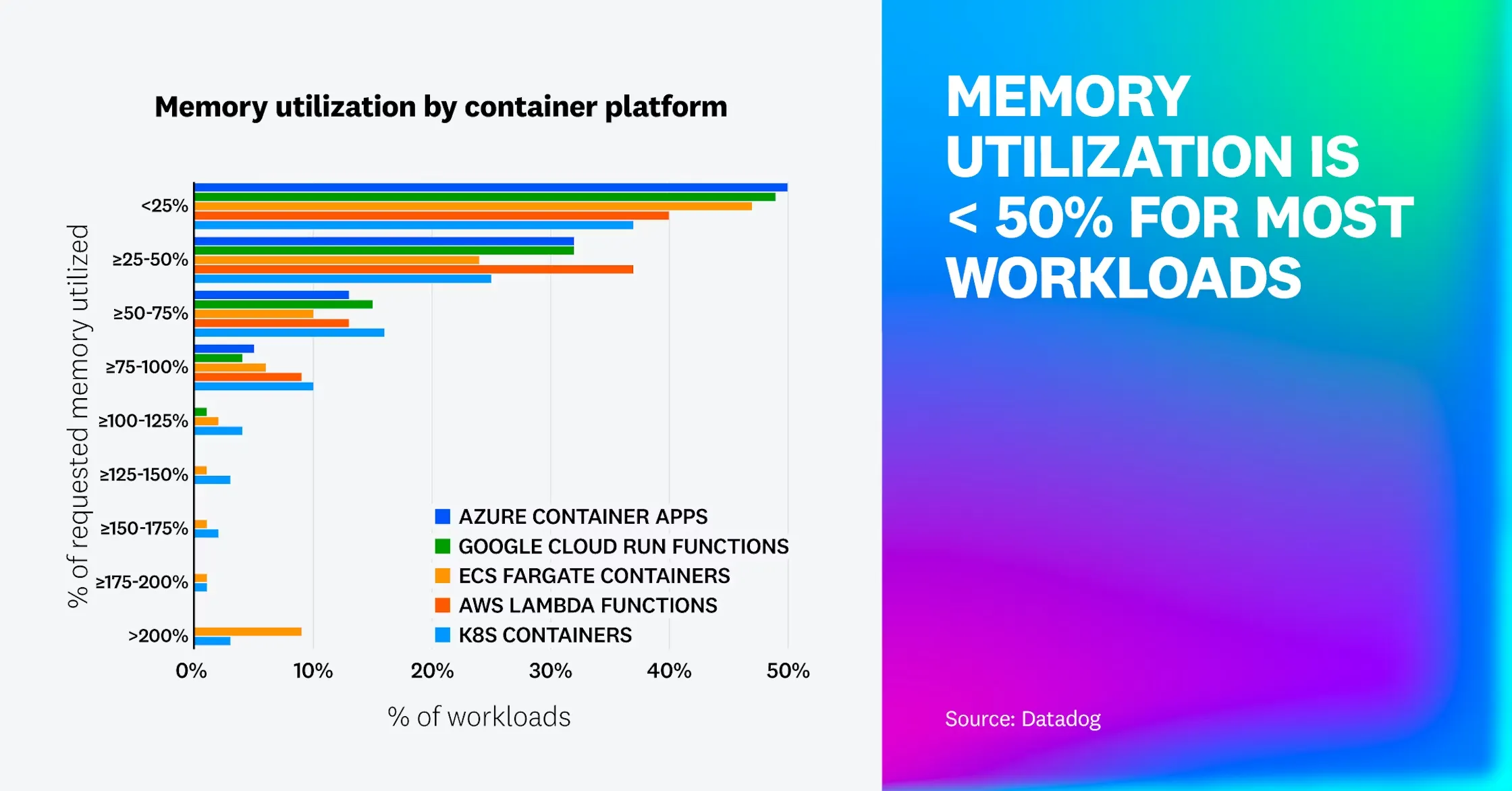

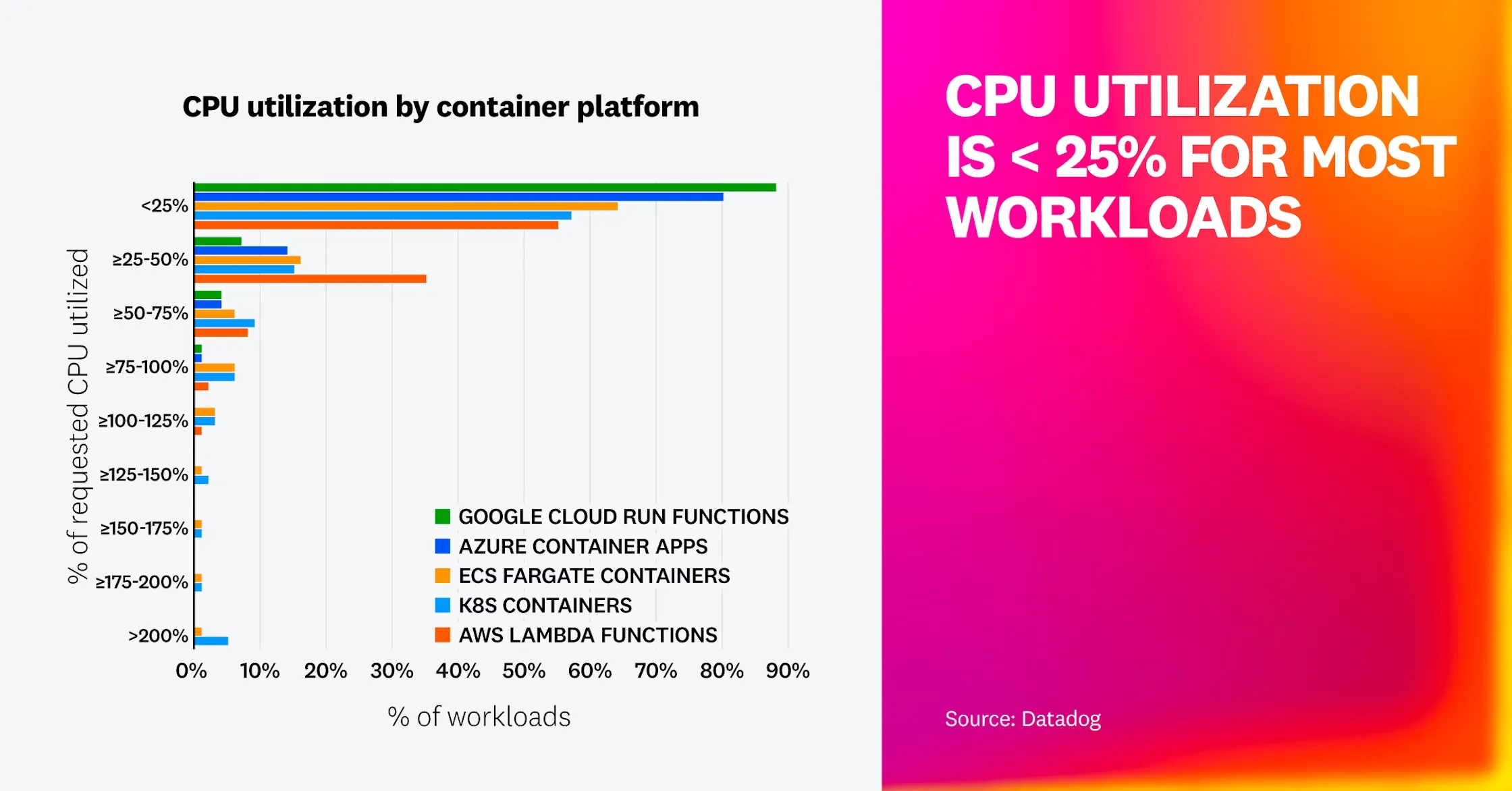

The data is striking: despite the sophistication of modern infrastructure, most workloads use less than 50% of their requested memory and less than 25% of their requested CPU.

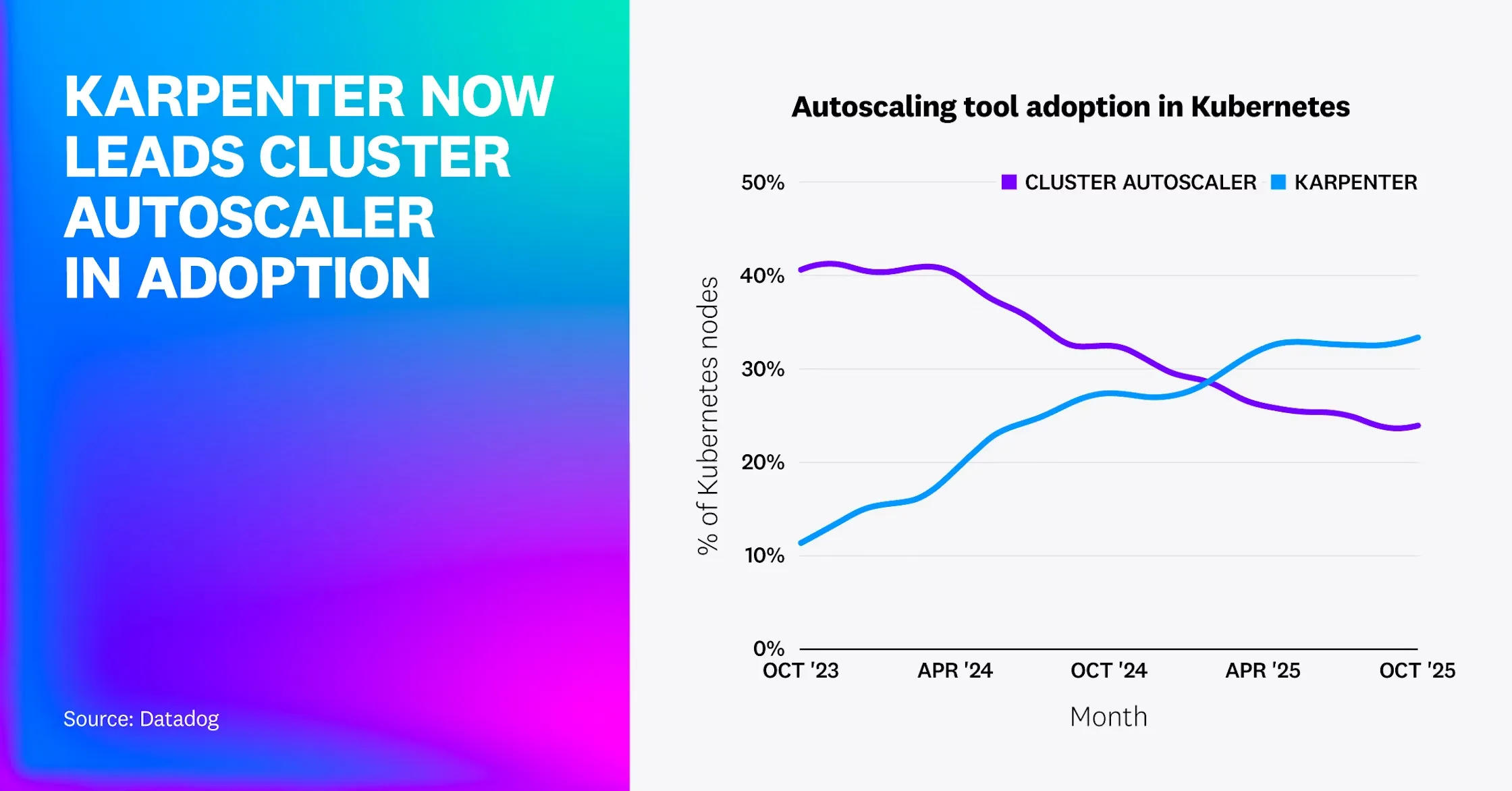

While the industry is adopting better tools—like the rise of Karpenter over the Cluster Autoscaler—tooling alone isn't solving the waste problem.

As maintainers of the Karpenter project, the team at CloudPilot AI has a unique vantage point. We understand where the current ecosystem excels, but more importantly, we see exactly what is missing.

Here is how CloudPilot AI addresses the key findings from the report with industry-leading innovation.

1. The Karpenter Evolution: Why Node Autoscaling is Only Half the Battle

The Datadog Finding

Karpenter adoption has surged by 22%, overtaking the Kubernetes Cluster Autoscaler to become the new standard for node provisioning.

The Reality Check

At CloudPilot AI, we champion this shift. We know Karpenter inside and out because we help build it. We also recognize its inherent architectural limitation: Karpenter is strictly a Node Autoscaler. It excels at "bin-packing" (fitting your Pods onto nodes as tightly as possible), but it operates blindly, accepting the resource requests defined in your YAML manifests as absolute truth.

- If your Pods are over-provisioned (and data suggests 75% of them are), Karpenter is simply packing "bloated" workloads efficiently.

- Result: You are effectively optimizing the packaging of waste.

🚀 The CloudPilot AI Advantage

Optimizing the container is just as critical as optimizing the instance. We bridge the gap by synchronizing the Pod layer with the Node layer:

- Pod First: Our Workload Autoscaler analyzes real-time usage and dynamically right-sizes your Pods (reducing requests to match reality).

- Node Second: Once the Pods are slimmed down, our Node Autoscaler (leveraging Karpenter’s speed) consolidates them onto the most cost-effective instances.

This distinct two-layer approach ensures you aren't just optimizing where your workloads run, but how they run.
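
To make the "Pod first, Node second" idea concrete, here is a minimal sketch in Python. It is not CloudPilot AI's or Karpenter's implementation: the `Pod` type, the 20% headroom, the 4-vCPU node size, and the first-fit-decreasing packing are all assumptions chosen for illustration, and only CPU is considered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pod:
    name: str
    cpu_request: float  # vCPU requested in the manifest
    cpu_usage: float    # observed peak vCPU usage (hypothetical metric)


def right_size(pod: Pod, headroom: float = 0.2) -> Pod:
    """Set the request to usage plus headroom, never above the original request."""
    new_request = min(pod.cpu_request, round(pod.cpu_usage * (1 + headroom), 3))
    return Pod(pod.name, new_request, pod.cpu_usage)


def nodes_needed(pods: list[Pod], node_cpu: float) -> int:
    """Count nodes used by first-fit-decreasing packing on CPU requests only.

    Assumes every single request fits on one node.
    """
    free: list[float] = []  # remaining vCPU on each node opened so far
    for pod in sorted(pods, key=lambda p: p.cpu_request, reverse=True):
        for i, remaining in enumerate(free):
            if pod.cpu_request <= remaining + 1e-9:
                free[i] = remaining - pod.cpu_request
                break
        else:
            # No existing node has room, so open a new one.
            free.append(node_cpu - pod.cpu_request)
    return len(free)


# Eight Pods that each request 2 vCPU but peak at 0.4 vCPU (made-up numbers).
pods = [Pod(f"api-{i}", cpu_request=2.0, cpu_usage=0.4) for i in range(8)]

before = nodes_needed(pods, node_cpu=4.0)
after = nodes_needed([right_size(p) for p in pods], node_cpu=4.0)
print(before, after)  # 4 1
```

With these made-up numbers, packing the original requests needs four nodes, while packing the right-sized requests needs one. The packing algorithm is identical in both runs; only the requests it trusts have changed.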

2. The "Long-Tail" Problem: Optimizing Heavy Java Applications

The Datadog Finding

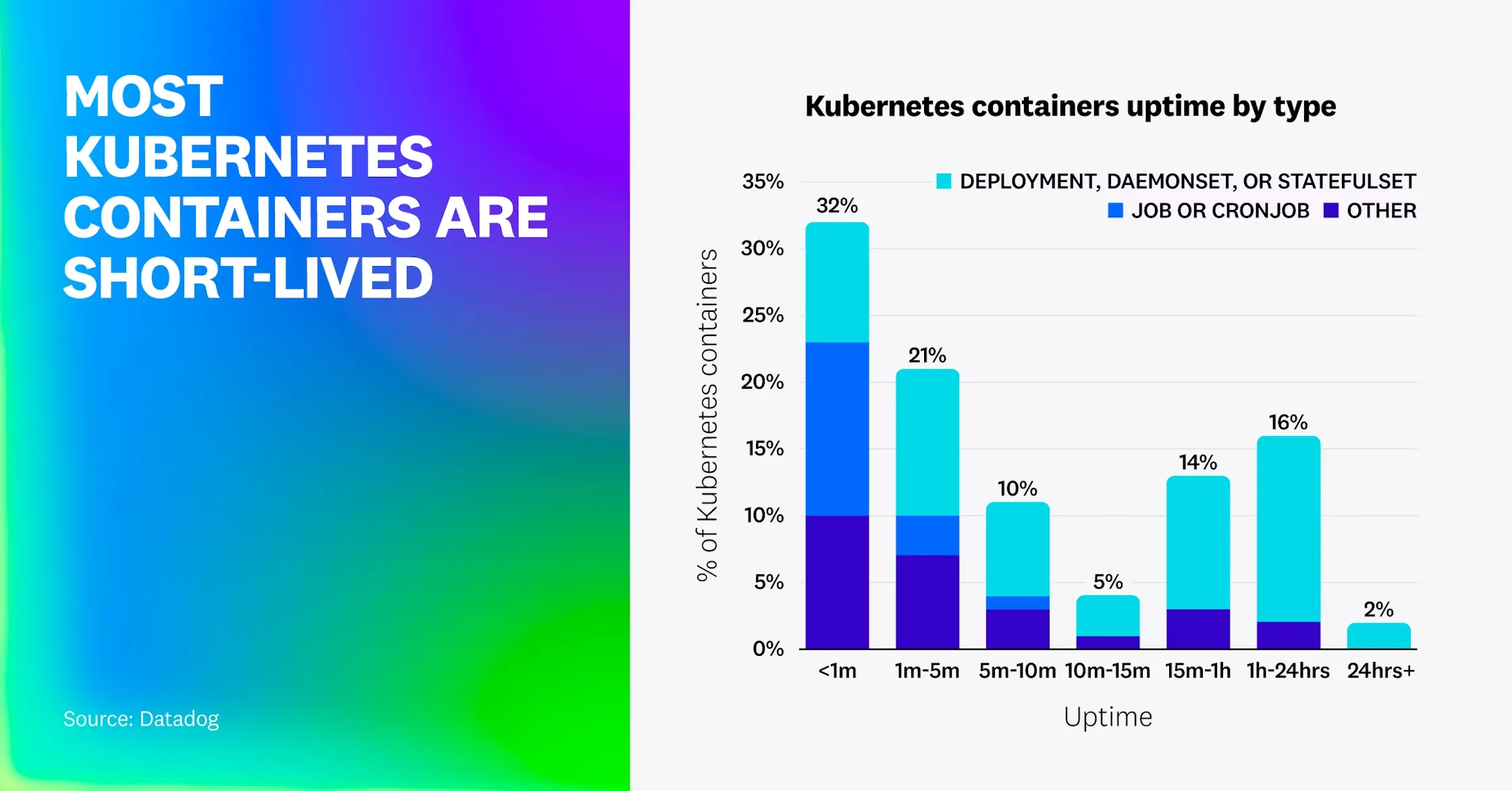

"Most Kubernetes containers are short-lived... almost two-thirds have an uptime under 10 minutes."

The Enterprise Reality

While Datadog highlights short-lived jobs, enterprise production environments often have a massive footprint of long-running, heavy applications—particularly Java. The challenge with Java is the "Startup vs. Runtime" gap:

- Startup: Needs massive resources (e.g., 8GB RAM, 4 vCPU) to initialize.

- Runtime: Needs a fraction of that (e.g., 2GB RAM) to run stably.

- The Waste: Standard autoscalers keep the request pegged at the peak startup requirement for the lifetime of the application.
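
The size of this gap can be estimated with simple arithmetic. The sketch below uses the example figures above (8 GB at startup, 2 GB at runtime); the 5-minute startup window and 24-hour uptime are assumptions added purely for illustration.

```python
def wasted_fraction(startup_gb: float, runtime_gb: float,
                    startup_minutes: float, uptime_minutes: float) -> float:
    """Fraction of requested memory-time that goes unused when the request
    stays pinned at the startup peak for the whole uptime."""
    if not 0 <= startup_minutes <= uptime_minutes:
        raise ValueError("startup_minutes must be between 0 and uptime_minutes")
    requested = startup_gb * uptime_minutes
    needed = (startup_gb * startup_minutes
              + runtime_gb * (uptime_minutes - startup_minutes))
    return (requested - needed) / requested


# 8 GB request, 2 GB steady state, 5-minute startup, 24 hours of uptime.
print(round(wasted_fraction(8, 2, 5, 24 * 60), 3))  # 0.747
```

Under these assumptions, roughly three quarters of the requested memory-time is never needed, and the fraction grows as uptime gets longer relative to startup.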

🚀 Coming Late 2025: Intelligent JVM Resizing

To solve this persistent inefficiency, CloudPilot AI is introducing Intelligent JVM Resizing.

- How it works: Our upcoming feature will automatically adjust CPU and memory requests based on JVM parameters and lifecycle phases.

- The Benefit: We will slash costs for enterprise Java workloads without risking out-of-memory (OOM) errors during initialization.

3. The ARM Opportunity: Stability Meets Savings

The Datadog Finding

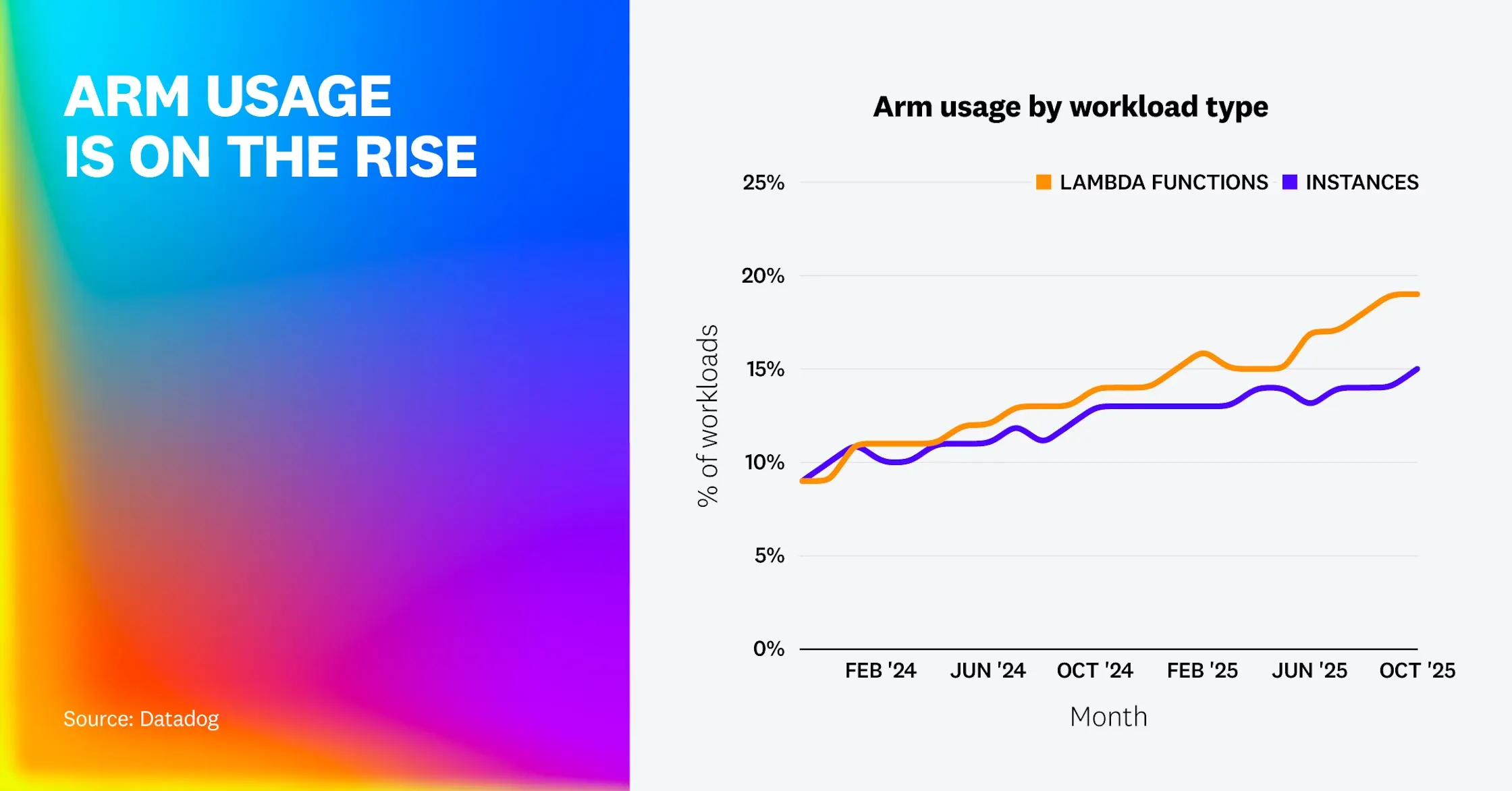

ARM usage continues to expand across cloud instances due to superior price-performance ratios.

The Hidden Benefit: Reliability

Our internal data reveals a second, often overlooked benefit: Reliability. According to CloudPilot AI’s Spot Insight, ARM-based Spot instances often exhibit significantly lower interruption rates compared to their x86 counterparts. Since the x86 market remains highly saturated and volatile, ARM offers a unique "sweet spot" of extreme cost efficiency and high availability. However, fear of capacity shortages often prevents teams from fully capitalizing on these savings.

🚀 Seamless Multi-Architecture Support

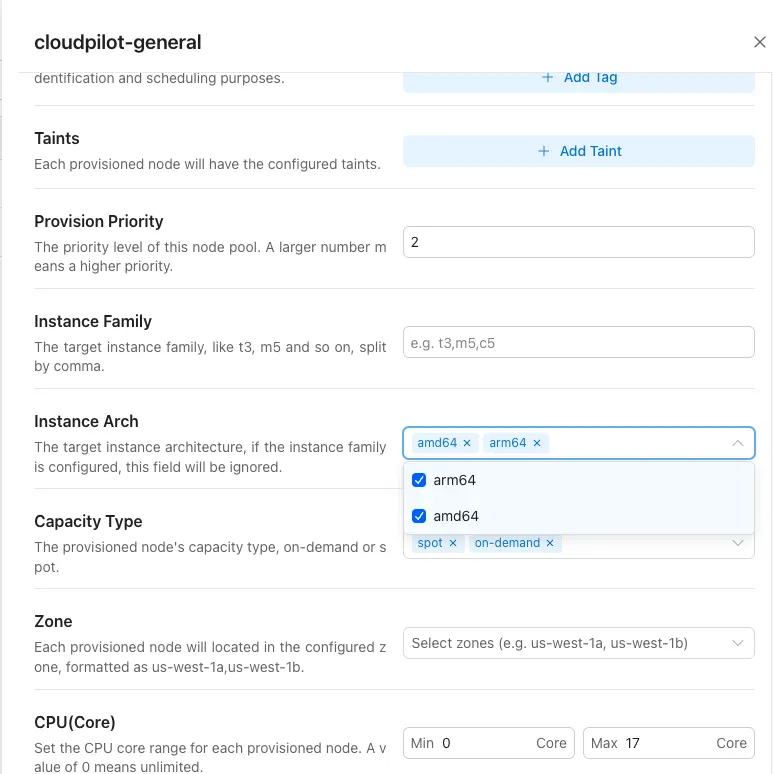

CloudPilot AI eliminates this risk through Multi-Architecture Orchestration. We don't just recommend ARM; we build the safety net required to use it.

- One-Click Enablement: In the CloudPilot AI console, customers can enable Multi-Architecture node pools instantly.

- Dynamic Mixing: Our autoscaler dynamically mixes x86 and ARM instances.

- Priority Logic: We prioritize the most stable and cost-effective ARM Spot instances while retaining the ability to fall back to x86 seamlessly if needed.
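
The priority logic described above can be sketched as a simple ranking. This is an illustrative model only, not CloudPilot AI's actual selection algorithm: the `SpotOffer` type, the instance names, the prices, the interruption rates, and the 10% interruption ceiling are all hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class SpotOffer:
    instance_type: str        # hypothetical name, not a real instance type
    arch: str                 # "arm64" or "amd64"
    hourly_price: float       # USD, made-up value
    interruption_rate: float  # 0.0 to 1.0, made-up value
    available: bool


def pick_offer(offers: list[SpotOffer],
               max_interruption: float = 0.10) -> Optional[SpotOffer]:
    """Prefer ARM, then lower interruption rate, then lower price.

    Falls back to x86 automatically when no ARM offer is usable.
    """
    usable = [o for o in offers
              if o.available and o.interruption_rate <= max_interruption]
    if not usable:
        return None
    return min(usable, key=lambda o: (o.arch != "arm64",
                                      o.interruption_rate,
                                      o.hourly_price))


offers = [
    SpotOffer("arm-large-a", "arm64", 0.030, 0.04, True),
    SpotOffer("arm-large-b", "arm64", 0.028, 0.15, True),  # too volatile
    SpotOffer("x86-large-a", "amd64", 0.035, 0.08, True),
]
print(pick_offer(offers).instance_type)  # arm-large-a

# Simulate an ARM capacity shortage: the picker falls back to x86.
shortage = [replace(o, available=False) if o.arch == "arm64" else o
            for o in offers]
print(pick_offer(shortage).instance_type)  # x86-large-a
```

Ranking by a tuple keeps the fallback implicit: x86 offers are always in the candidate set, so no separate failure path is needed when ARM capacity disappears.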

Conclusion

The Datadog report effectively diagnoses the symptoms of modern cloud infrastructure: low utilization, shifting tooling, and evolving architectures.

CloudPilot AI provides the cure. We believe that true efficiency requires more than just better node provisioning. By fusing deep Karpenter expertise with intelligent Workload Autoscaling and risk-free Spot orchestration, we close the gap between application demands and infrastructure supply.

With CloudPilot AI, your infrastructure stops being a source of waste and starts being a competitive advantage. It isn't just "running"—it is fully optimized.