Karpenter is a powerful Kubernetes node autoscaler built for flexibility, performance, and simplicity. It automatically provisions compute resources in response to unschedulable pods, enabling faster scaling and better utilization than traditional cluster autoscalers.

However, when used in production environments with diverse workloads and dynamic spot pricing, teams often encounter non-obvious tradeoffs where availability risks or cost inefficiencies emerge.

CloudPilot AI is designed to address these advanced operational challenges. As your SRE Agent inside Kubernetes, it builds on the core principles of autoscaling while adding intelligent, context-aware behaviors that improve service resilience and optimize cloud costs without adding operational complexity.

Here's a detailed comparison of how CloudPilot AI improves upon Karpenter's behavior in critical scenarios.

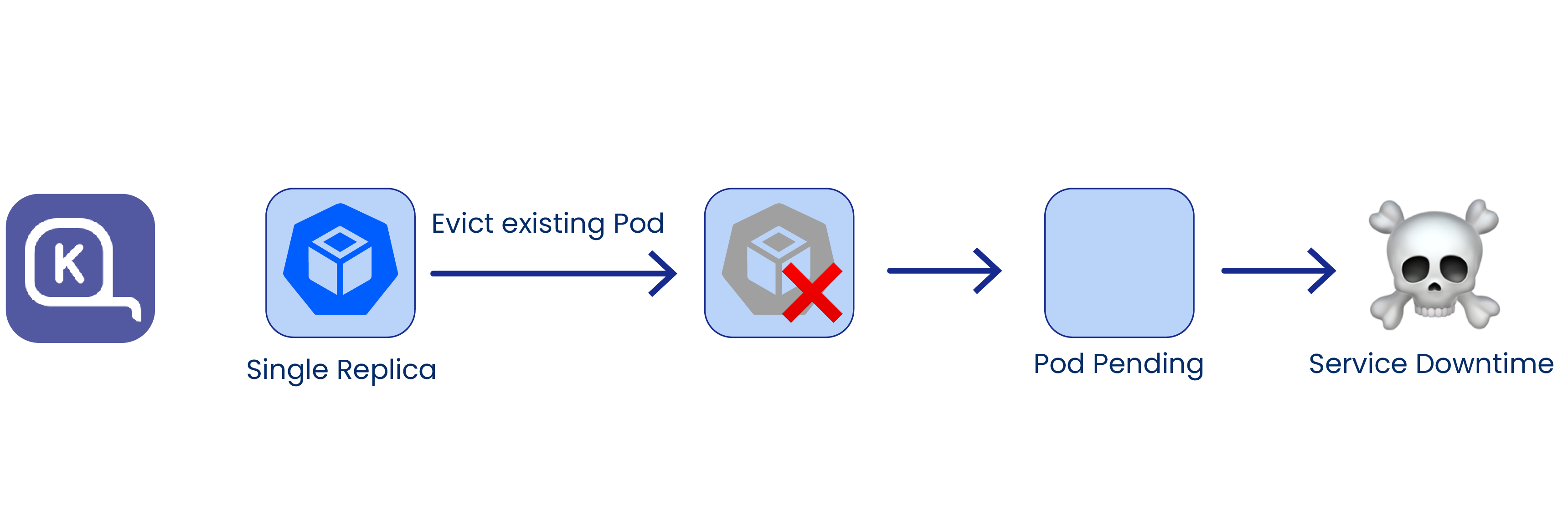

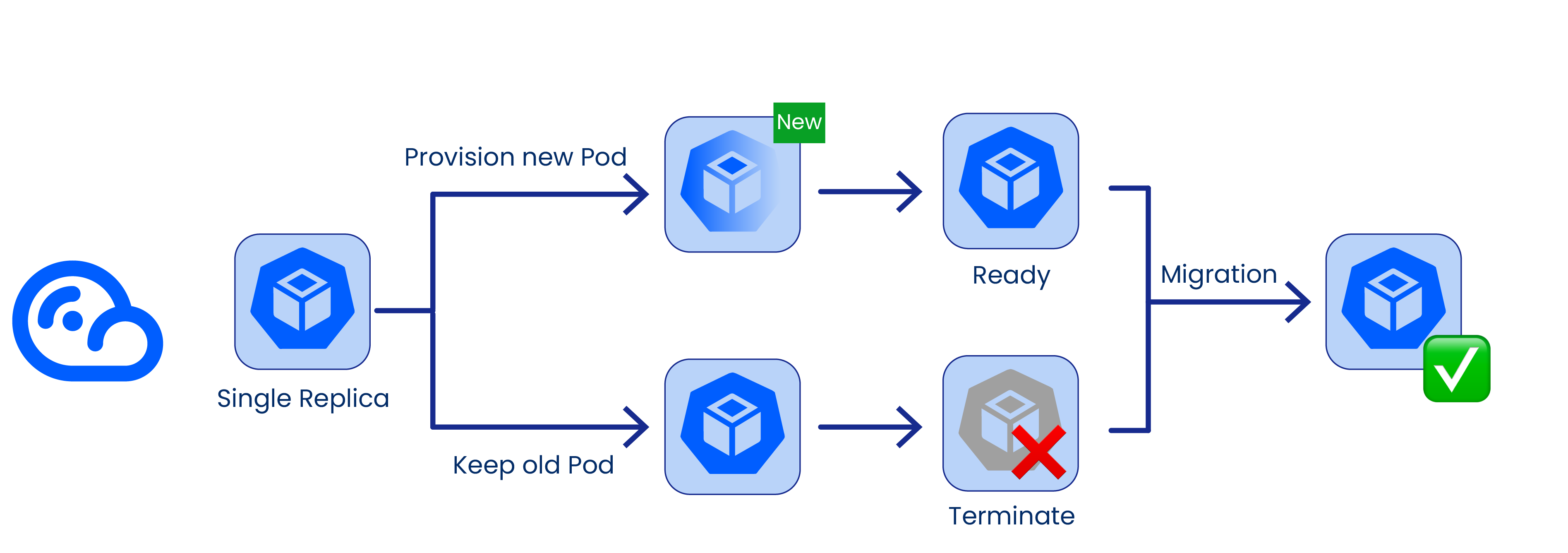

1. High Availability for Single Replica Workloads

Karpenter:

During consolidation or rebalancing, Karpenter may terminate a node that hosts a single-replica pod before the replacement is fully provisioned, causing service downtime, even if only briefly.

CloudPilot AI:

CloudPilot AI delays node termination until the new node is ready and the pod is confirmed running. This graceful handoff mechanism maintains availability for critical services like queues, databases, and stateful gateways, where even a few seconds of downtime can be unacceptable.
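The handoff rule described here can be written as a simple gate. This is a minimal sketch for illustration only, not CloudPilot AI's actual code; the `ReplacementStatus` type and `may_terminate_old_node` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ReplacementStatus:
    """Hypothetical snapshot of the replacement for a node being retired."""
    node_ready: bool   # the new node has joined the cluster and reports Ready
    pod_running: bool  # the single-replica pod is confirmed running on it


def may_terminate_old_node(status: ReplacementStatus) -> bool:
    # The old node is only released once BOTH conditions hold, so the
    # workload always has somewhere to run during the swap.
    return status.node_ready and status.pod_running
```

With this gate, a node whose replacement is ready but whose pod is still starting keeps serving traffic until the pod is confirmed running.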

2. Predictive Spot Interruption Mitigation

Karpenter:

Karpenter reacts to the cloud provider's standard spot interruption notice, which is 2 minutes on AWS. In high-load situations this may not be enough time, resulting in pod eviction delays and scheduling contention.

CloudPilot AI:

CloudPilot AI's Spot Prediction Engine uses predictive modeling to detect interruption signals up to 45 minutes in advance. It proactively drains and replaces high-risk nodes, dramatically reducing the chance of disruption during traffic spikes or deployment events.
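To make the idea of proactive draining concrete, the sketch below picks which nodes to drain first from a set of predicted interruption risks. It assumes the prediction is exposed as a probability per node and that a fixed threshold is used; both are illustrative assumptions, and `nodes_to_drain` is a hypothetical helper rather than part of any real API.

```python
def nodes_to_drain(risk_by_node: dict[str, float], threshold: float = 0.7) -> list[str]:
    """Return node names whose predicted interruption risk meets the threshold.

    `risk_by_node` maps a node name to an assumed probability in [0, 1].
    Nodes are returned highest risk first so they are replaced earliest;
    ties are broken by name to keep the order deterministic.
    """
    risky = [(risk, name) for name, risk in risk_by_node.items() if risk >= threshold]
    return [name for risk, name in sorted(risky, key=lambda item: (-item[0], item[1]))]
```

For example, given risks of 0.9, 0.2, and 0.75 for three nodes, only the first and third would be selected, in that order.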

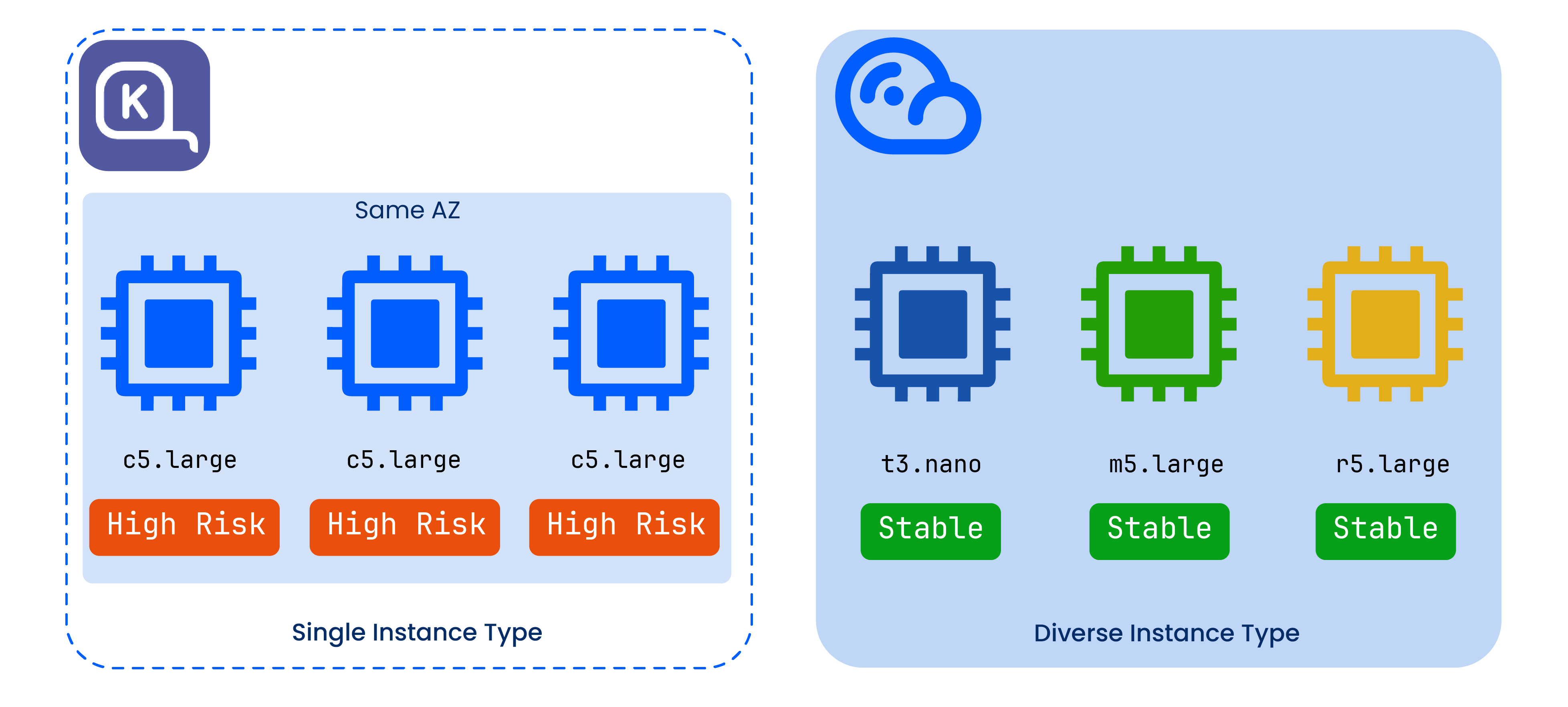

3. Instance Type Diversification for Greater Resilience

Karpenter:

Karpenter often selects a single instance type to binpack workloads for cost efficiency. While performant, this can lead to instance-type lock-in, which amplifies risk during spot price spikes or batch interruptions.

CloudPilot AI:

CloudPilot AI deliberately distributes workloads across multiple instance types and availability zones, balancing cost efficiency with resilience. This reduces over-reliance on any one spot market and improves cluster availability during market fluctuations.
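As a rough illustration of diversification, the sketch below spreads a number of nodes evenly across candidate (instance type, zone) pairs. It is a deliberately simplified round-robin that ignores price and capacity, which a real placement engine would weigh; the `diversify` helper and the candidate list are assumptions made for the example.

```python
from collections import Counter
from itertools import cycle


def diversify(node_count: int, candidates: list[tuple[str, str]]) -> Counter:
    """Assign `node_count` nodes round-robin across (instance_type, zone) pairs."""
    plan: Counter = Counter()
    # zip stops after node_count items (or immediately if candidates is empty).
    for _, choice in zip(range(node_count), cycle(candidates)):
        plan[choice] += 1
    return plan


plan = diversify(6, [
    ("m5.large", "us-east-1a"),
    ("m5a.large", "us-east-1b"),
    ("m6i.large", "us-east-1c"),
])
# No single spot market holds more than a third of the nodes in this plan.
largest_share = max(plan.values()) / sum(plan.values())
```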

4. Automatic Anti-Affinity Enforcement

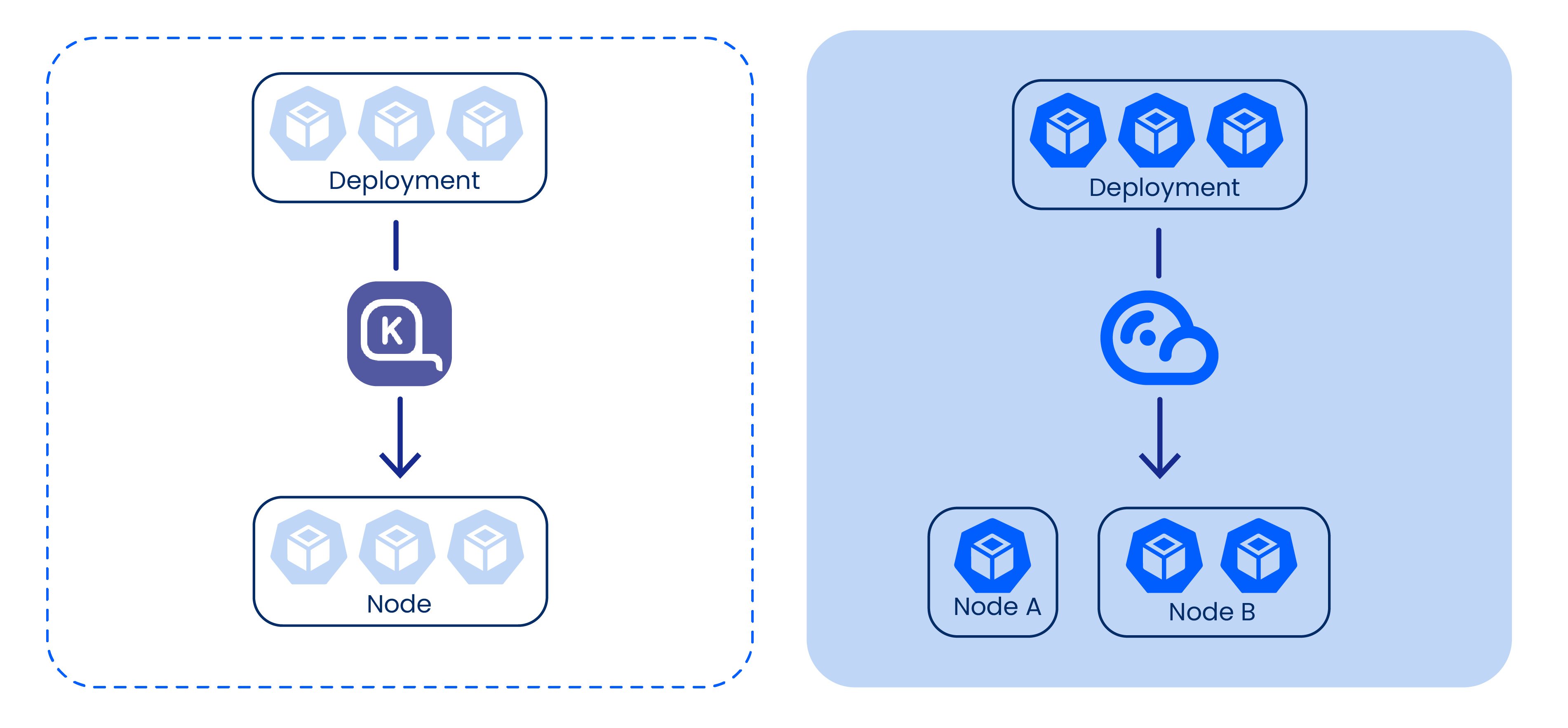

Karpenter:

Unless developers define pod anti-affinity, Karpenter may co-locate replicas of the same workload on the same node. This can create a single point of failure for multi-replica services.

CloudPilot AI:

CloudPilot AI enforces anti-affinity policies by default for replica workloads. It automatically ensures that replicas are spread across at least 2 nodes, helping teams achieve high availability without having to manage complex affinity rules manually.
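For reference, the manual rule that developers would otherwise have to write looks roughly like the following. The sketch builds the standard Kubernetes `podAntiAffinity` stanza as a Python dict; the `app` label key and the `hostname_anti_affinity` helper are assumptions, and this is not a claim about how CloudPilot AI applies its policy internally.

```python
def hostname_anti_affinity(app_label: str) -> dict:
    """Build a soft pod anti-affinity rule keyed on the node hostname.

    The returned dict is meant to sit under `spec.affinity` of a pod template.
    It asks the scheduler to prefer nodes that do not already run a pod
    carrying the same `app` label (the label key is an assumption here).
    """
    return {
        "podAntiAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": 100,
                    "podAffinityTerm": {
                        "labelSelector": {"matchLabels": {"app": app_label}},
                        "topologyKey": "kubernetes.io/hostname",
                    },
                }
            ]
        }
    }
```

This is the soft ("preferred") form, so the scheduler may still co-locate replicas when no other node fits. The `requiredDuringSchedulingIgnoredDuringExecution` form is stricter and can leave pods pending if there are too few nodes.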

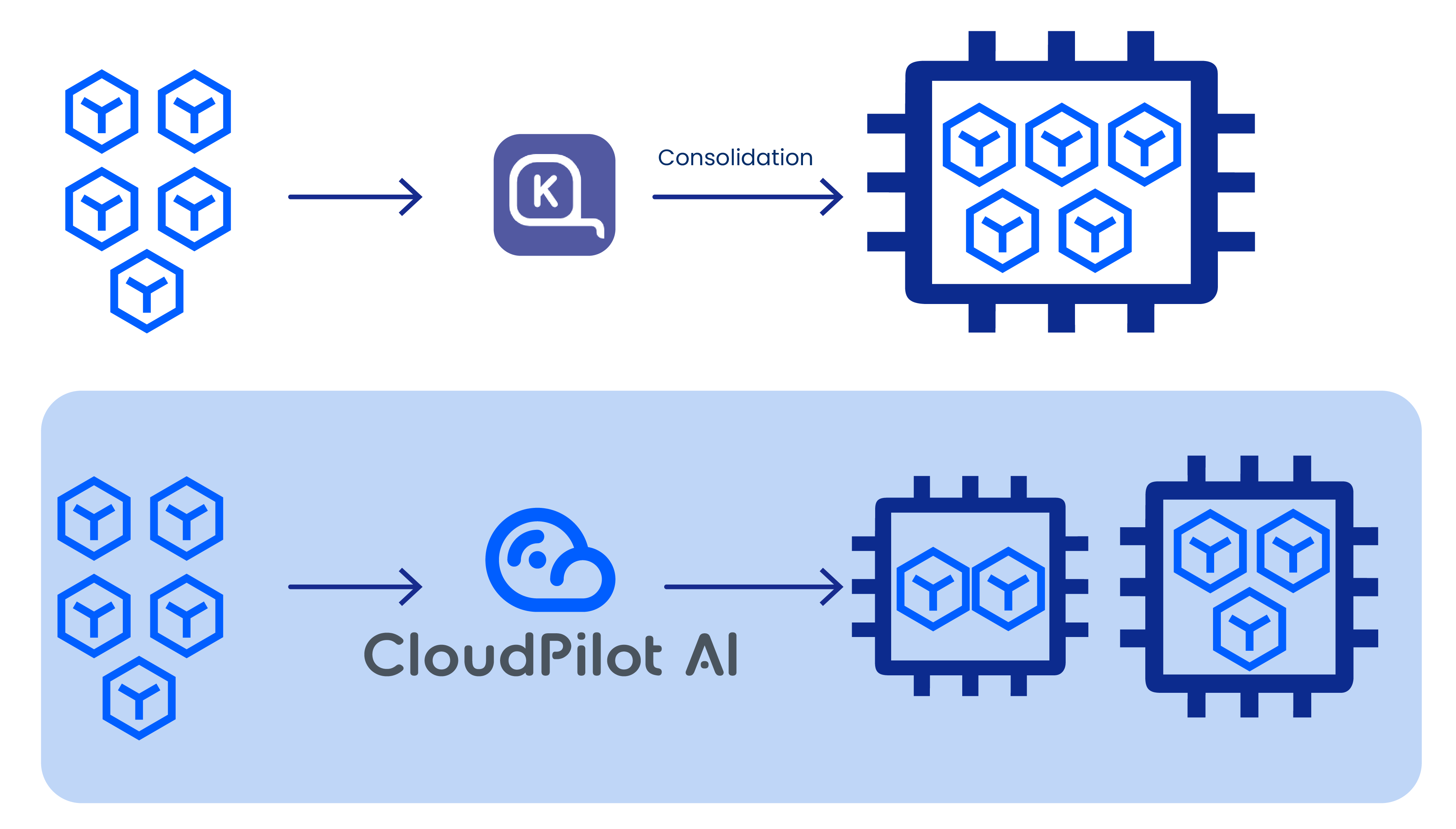

5. Balanced Workload Placement for Safer Consolidation

Karpenter:

Karpenter's binpacking strategy tends to concentrate workloads on fewer large nodes to minimize spend. But when these nodes are reclaimed or rebalanced, the resulting disruption can be significant.

CloudPilot AI:

CloudPilot AI uses a balance-first placement strategy, spreading workloads across nodes of various sizes to reduce the impact of node terminations and support safer consolidation events.
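The tradeoff between binpacking and balanced placement can be quantified as the share of pods lost when the busiest node goes away. The sketch below is an illustrative metric only; `worst_case_loss` is a hypothetical helper, and pod counts stand in for whatever weighting a real scheduler would use.

```python
def worst_case_loss(pods_per_node: list[int]) -> float:
    """Fraction of all pods disrupted if the most heavily loaded node terminates."""
    total = sum(pods_per_node)
    return max(pods_per_node) / total if total else 0.0


packed = worst_case_loss([10, 2])       # most pods concentrated on one large node
balanced = worst_case_loss([4, 4, 4])   # the same 12 pods spread over three nodes
```

In this example the packed layout risks about 83% of the pods in a single termination, while the balanced layout risks about 33%.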

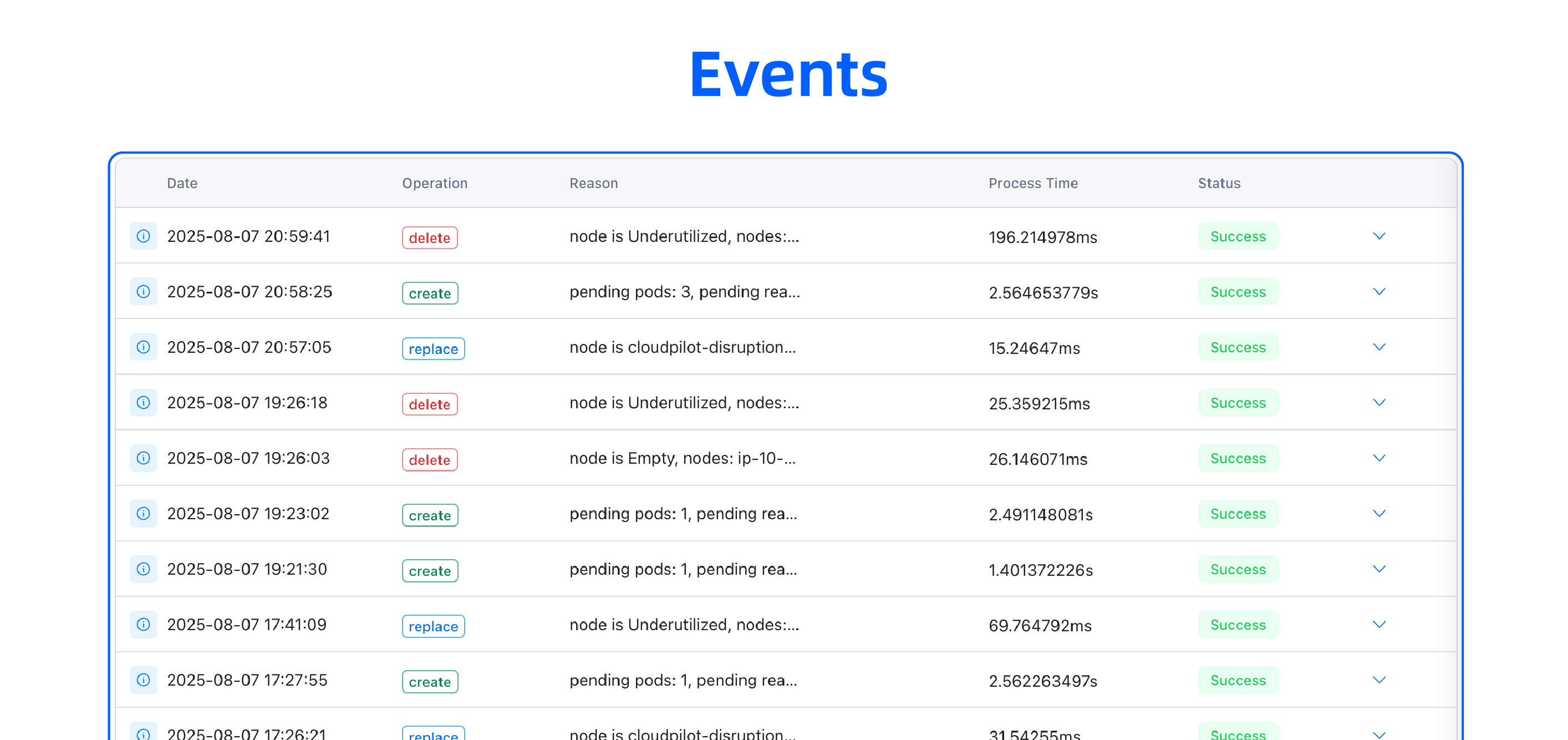

6. Node-level Event Logs

Karpenter:

While Karpenter does record event logs, they’re scattered and hard to interpret. They aren’t tied clearly to the node lifecycle, leaving you guessing where to start when troubleshooting.

CloudPilot AI:

CloudPilot AI organizes Karpenter's logs into clear, node-level event histories—including create, delete, and replace operations. Each event is enriched with status, reason, and raw data, giving you the context to troubleshoot faster and trust every automation decision.
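Conceptually, building a node-level history means grouping a flat event stream by node and ordering it in time. The sketch below shows that grouping under assumed field names (`node`, `time`, `action`, `reason`); it does not reflect Karpenter's real log schema or CloudPilot AI's implementation.

```python
from collections import defaultdict


def node_histories(events: list[dict]) -> dict[str, list[dict]]:
    """Group events by node name, each list ordered by the event's timestamp."""
    histories: dict[str, list[dict]] = defaultdict(list)
    for event in sorted(events, key=lambda e: e["time"]):
        histories[event["node"]].append(event)
    return dict(histories)


events = [
    {"node": "node-b", "time": 3, "action": "delete", "reason": "consolidation"},
    {"node": "node-a", "time": 1, "action": "create", "reason": "unschedulable pods"},
    {"node": "node-a", "time": 5, "action": "replace", "reason": "spot interruption"},
]
history = node_histories(events)
```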

7. Intelligent Scheduling for Persistent Volume Workloads

Karpenter:

If a Pod in a group depends on a Persistent Volume (PV) in a specific Availability Zone, Karpenter schedules the whole group in that zone. When the zone has limited capacity or higher prices, this can increase costs and risk service disruption.

CloudPilot AI:

CloudPilot AI detects which Pods depend on PVs and schedules only those in the required zone. The rest are placed in cheaper zones with better availability—reducing waste and avoiding scaling bottlenecks.
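The per-Pod zoning decision can be sketched as follows. The example assumes each Pod is described only by the zone of the PV it depends on (or `None` if it has no PV) and that a single cheapest zone is already known; `plan_zones` and these inputs are hypothetical simplifications.

```python
from typing import Optional


def plan_zones(pv_zone_by_pod: dict[str, Optional[str]], cheapest_zone: str) -> dict[str, str]:
    """Pin PV-backed pods to their volume's zone; send the rest to the cheapest zone."""
    return {
        pod: (pv_zone if pv_zone is not None else cheapest_zone)
        for pod, pv_zone in pv_zone_by_pod.items()
    }


placement = plan_zones(
    {"db-0": "us-east-1a", "api-0": None, "api-1": None},
    cheapest_zone="us-east-1c",
)
```

Only `db-0` stays in the zone that holds its volume; the two stateless Pods are free to land wherever capacity is cheaper.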

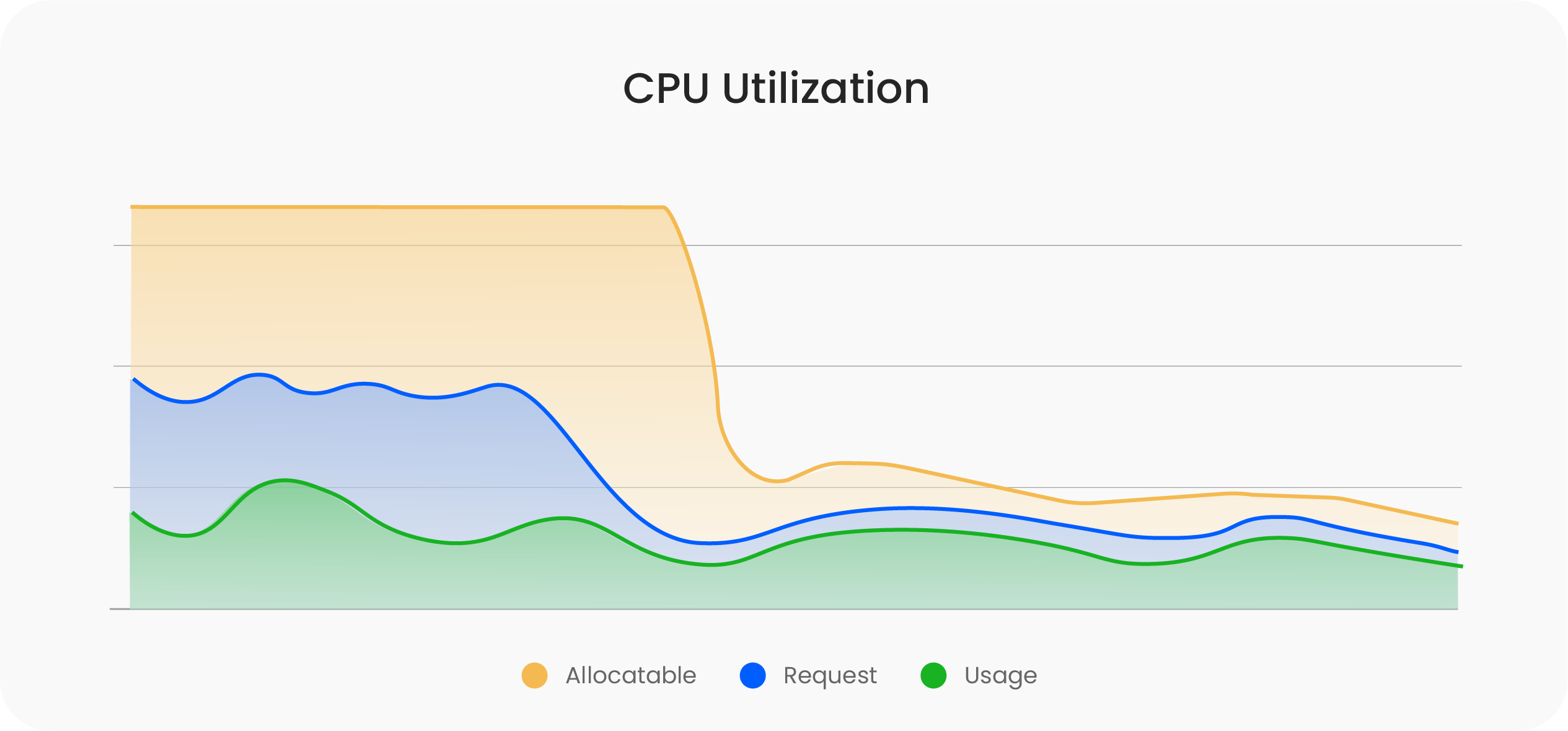

8. More Flexible Resource Allocation

Karpenter:

Karpenter makes its decisions from Pod resource requests and doesn't take actual resource usage or configured limits into account. If requests are misconfigured, this can lead to wasted resources or a higher risk of OOM (Out of Memory) errors during consolidation.

CloudPilot AI:

CloudPilot AI comes with built-in workload rightsizing. It continuously analyzes usage patterns and dynamically adjusts CPU and memory settings in real time, across any cloud or on-prem environment.

Instead of relying on manual configuration, CloudPilot AI uses historical data, live metrics, and safety buffers to proactively recommend and fine-tune resource requests, avoiding both underprovisioning and overprovisioning for better performance and cost efficiency.
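A common way to turn usage history into a request recommendation is to take a high percentile of observed usage and add a safety buffer. The sketch below shows that general technique; the 95th percentile, the 15% buffer, and the `recommend_request` name are assumptions for illustration, not CloudPilot AI's actual parameters or algorithm.

```python
import math


def recommend_request(samples: list[float], percentile: float = 0.95, buffer: float = 0.15) -> float:
    """Recommend a resource request from usage samples (e.g. memory in MiB).

    Uses the nearest-rank percentile of the samples, then adds a
    proportional safety buffer on top.
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(percentile * len(ordered)))  # 1-based nearest rank
    return ordered[rank - 1] * (1 + buffer)


usage_mib = [100, 120, 110, 400, 130, 125, 115, 105, 135, 140]
suggested = recommend_request(usage_mib)
```

With only ten samples the 95th percentile lands on the single 400 MiB spike, so the suggestion is driven by that peak. Lowering the percentile trades headroom for tighter packing.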

9. More Intuitive Visualization

Karpenter:

Karpenter relies on the command line for viewing resource states and activity logs. Information is fragmented and not easily visualized.

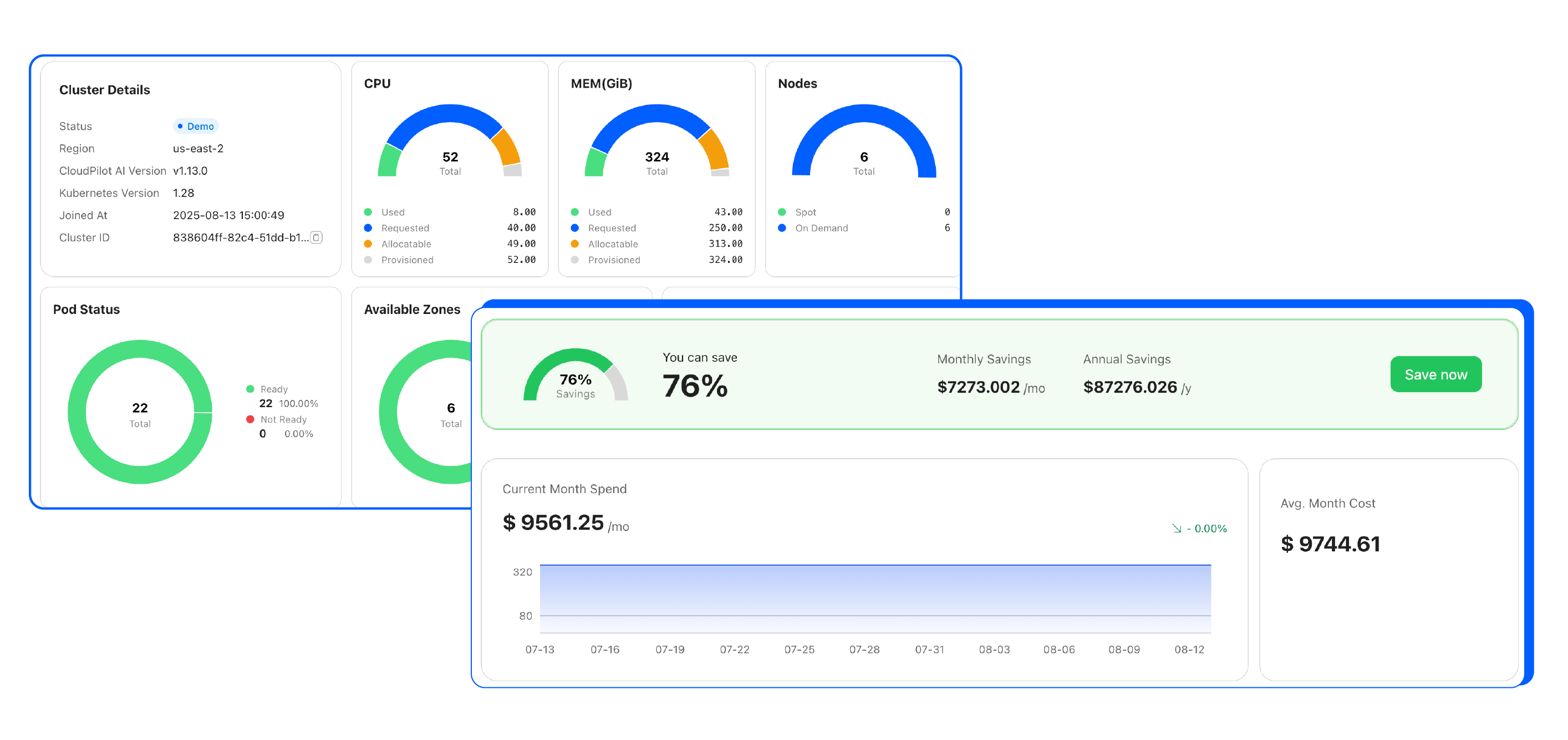

CloudPilot AI:

CloudPilot AI comes with a real-time visual dashboard that consolidates resource changes, event logs, monthly spend, and historical cost trends, giving you a clear, centralized view of infrastructure activity at a glance.

Conclusion

Karpenter brings powerful, flexible autoscaling capabilities to Kubernetes. But for teams operating in fast-changing environments, where every minute of downtime or dollar spent matters, an additional layer of automation and intelligence is often required.

CloudPilot AI serves as your SRE Agent inside Kubernetes—building on the foundation of node autoscaling while solving the hidden challenges of production workloads. By combining predictive spot awareness, smart placement, and resilient scheduling, it helps organizations achieve both cloud cost optimization and autoscaling stability at scale.

Learn how CloudPilot AI can help your infrastructure scale safely, cost-effectively, and autonomously in minutes, not weeks.

Visit cloudpilot.ai to get started.